Software Development & AI Code Generation: No “Vibes” – Real Results

AI code generation has not-so-quietly grown up. Amid all the hype, I’ve been tracking coding agents to understand how they can support the team at Fluid. This isn’t “vibe coding” anymore – it can genuinely accelerate delivery while you get on with everything else.

“Not real coding,” I hear someone yell into an unnamed social media account… To that I say: show me your handwritten machine code and I’ll gladly yield to that argument.

Now that the haters are dealt with, let’s get on with the experiment.

Here’s a real example from Fluid, where GitHub Copilot (paired with solid agent instructions) delivered a feature while I cooked dinner & reviewed a SaaS agreement.

For context: Fluid is a large codebase, roughly 1 million lines of code spread across database, C#, JavaScript and HTML, so changes are not trivial. That's the rub here. Most demos you see online amount to "I'll update my task-tracker demo app", which is fine for a first impression, but how does an agent handle changes where the context is far larger than it can take in one shot? Let's find out! 😅

Setting the scene

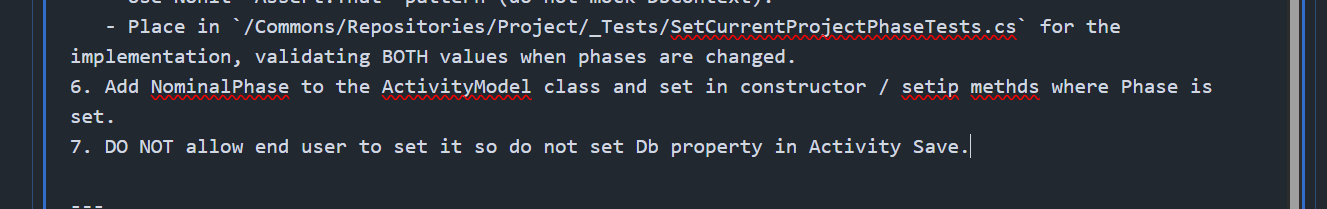

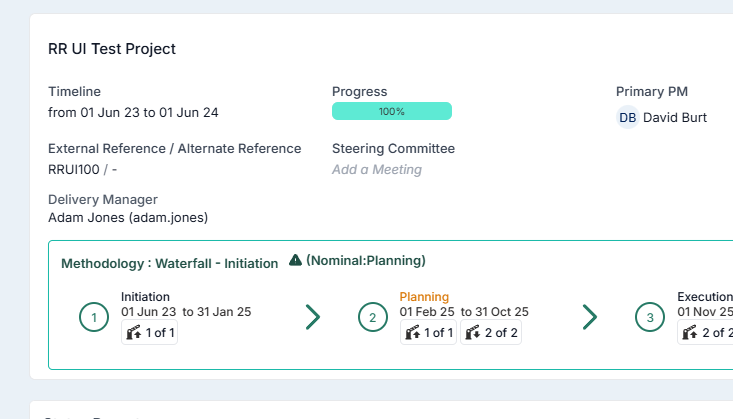

The requirement sounded simple enough: capture a project’s nominal phase and reconcile it against the current business-logic phase, across schema, logic, UI, and tests.

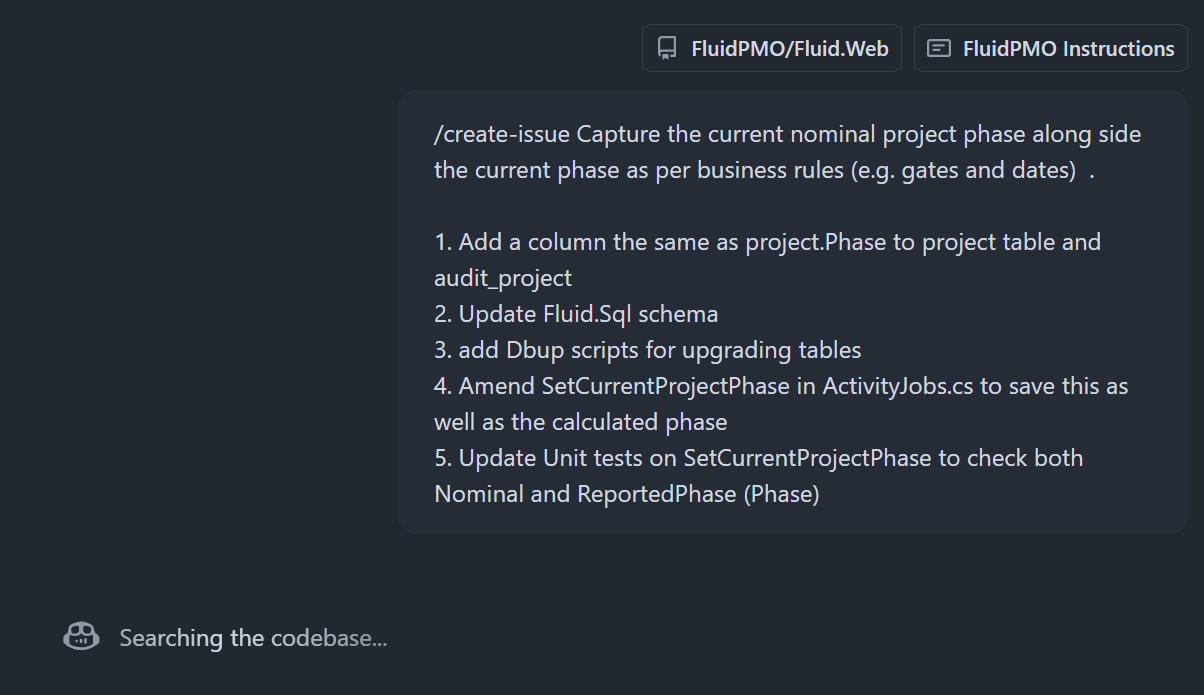

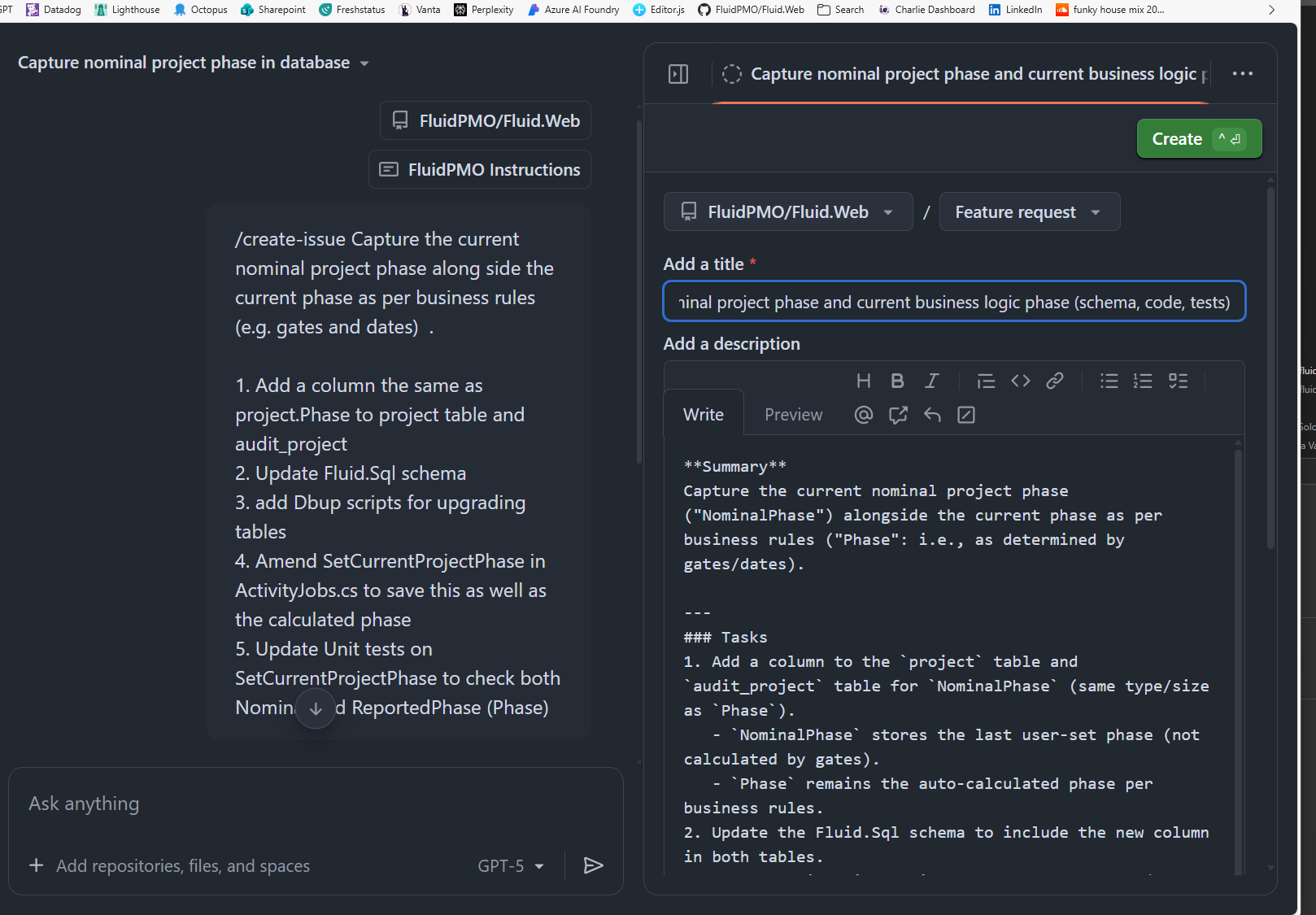

I kicked things off using Copilot’s /create-issue flow so I could define the work before writing any code. Take your time here: the effort to define a clearly scoped, unambiguous requirement will pay you back later.

I'd forgotten to mention some key solution steps and the overall approach, so I edit the issue and save. In the real world, I'd expect people (devs and product/BAs) to collaborate on this description. Just like in meat-based 🧠 development lifecycles, the more work you put in at this stage, the less work you have to do after the PR is complete.

Copilot takes over

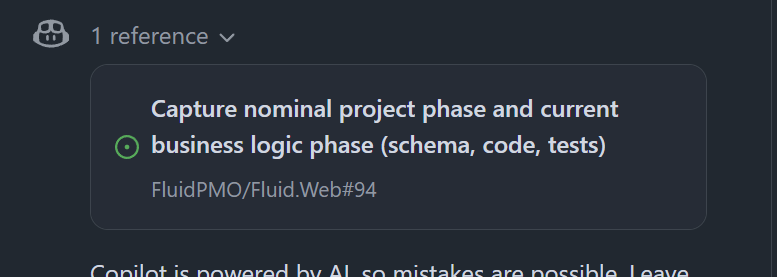

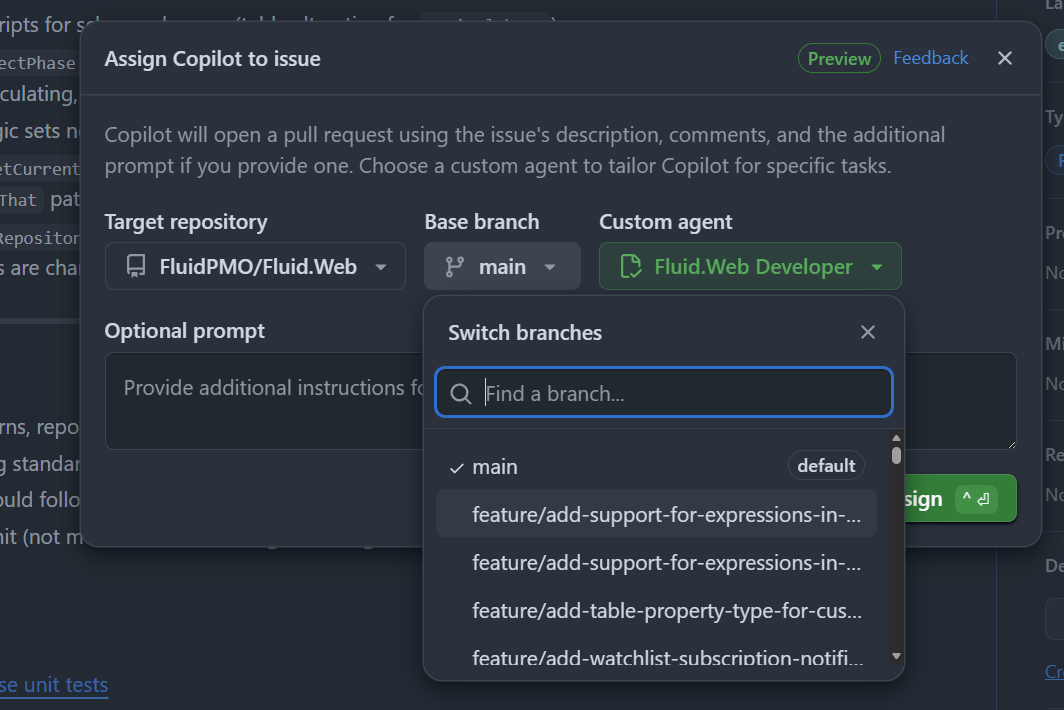

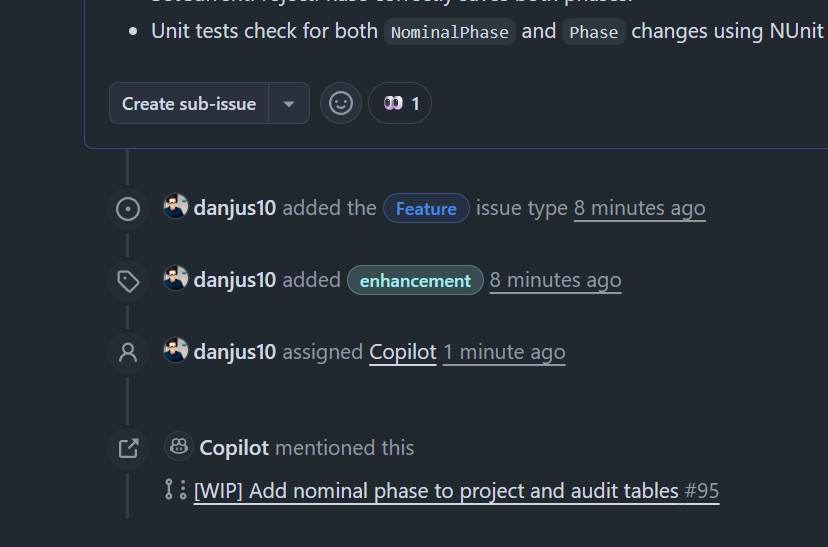

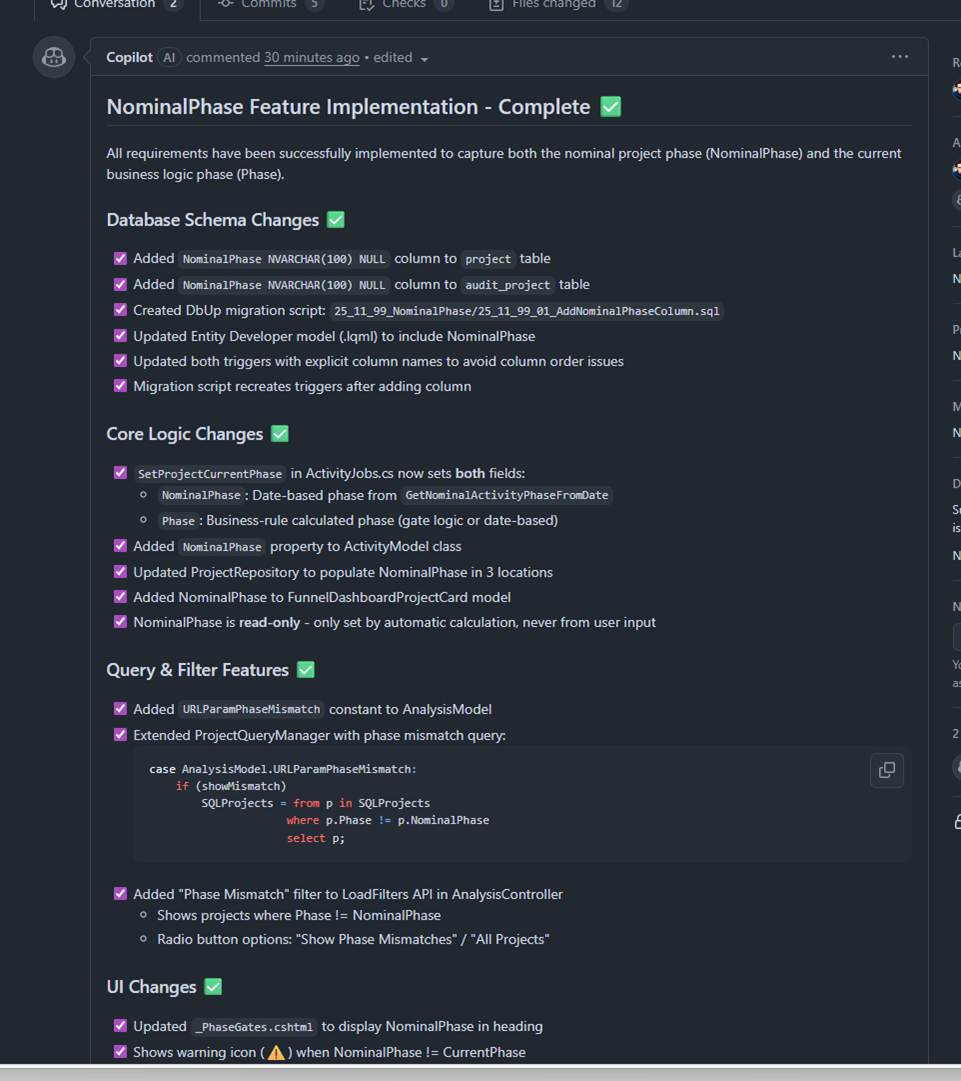

Once the issue looked right, Copilot opened a branch, created a WIP PR, and started making changes.

When I assign the task to Copilot, I'm given the option to set the base branch and the custom agent. Segue to custom agents: I've built out a set of instructions in the .github folder at the repository root that explain our coding rules, standards and how-tos. This means the agent understands Fluid's dev stack and isn't guessing how to handle technical steps such as database updates or queries.

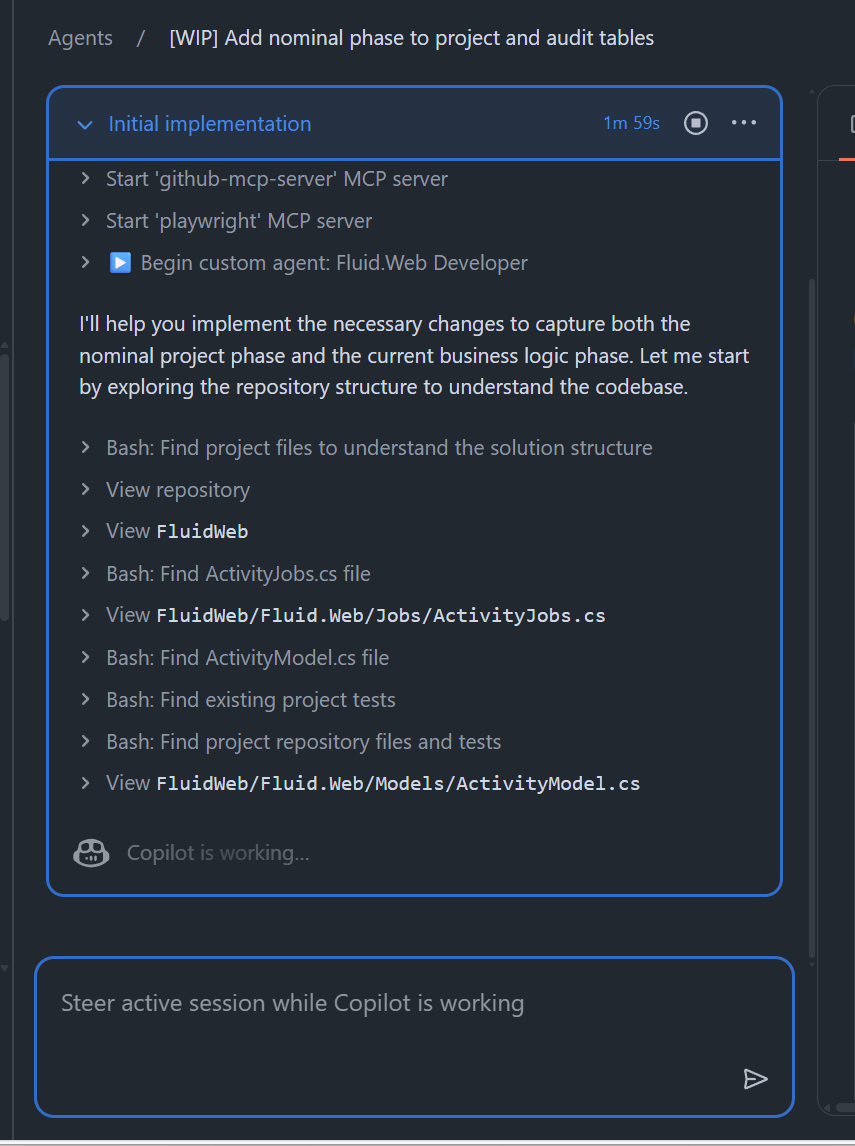

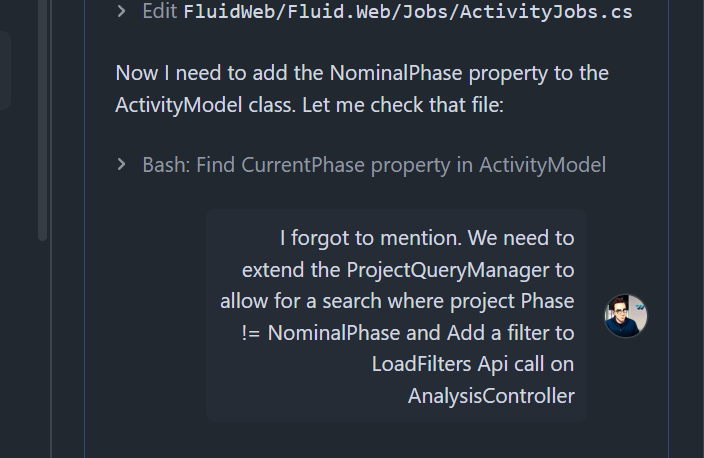

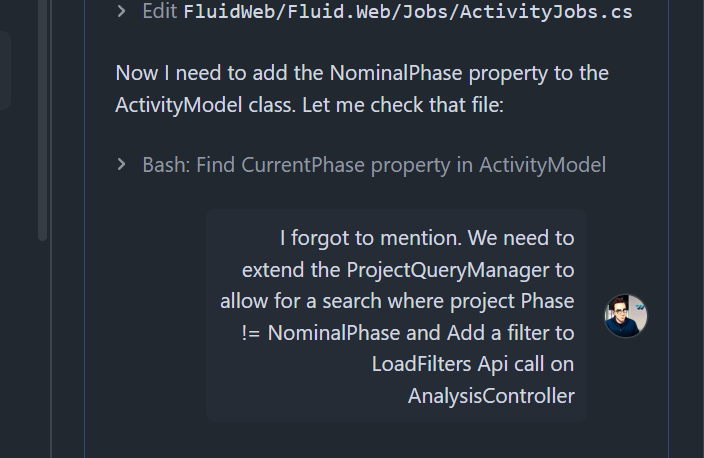

The agent has kicked off a workspace, so we can jump in and watch it work. In fact, I've just remembered a requirement I forgot to mention, so I'll drop into the agent space and let Copilot know. First, we can see the progress and where it's up to. Nice! I appreciate being able to check the working as well as the final change.

⌚ It's been 12 mins. I've just reviewed some comments on our SaaS agreement. Yay. 🥱💤

I can see Copilot has finished thinking and is making file changes.

Defining the real requirement

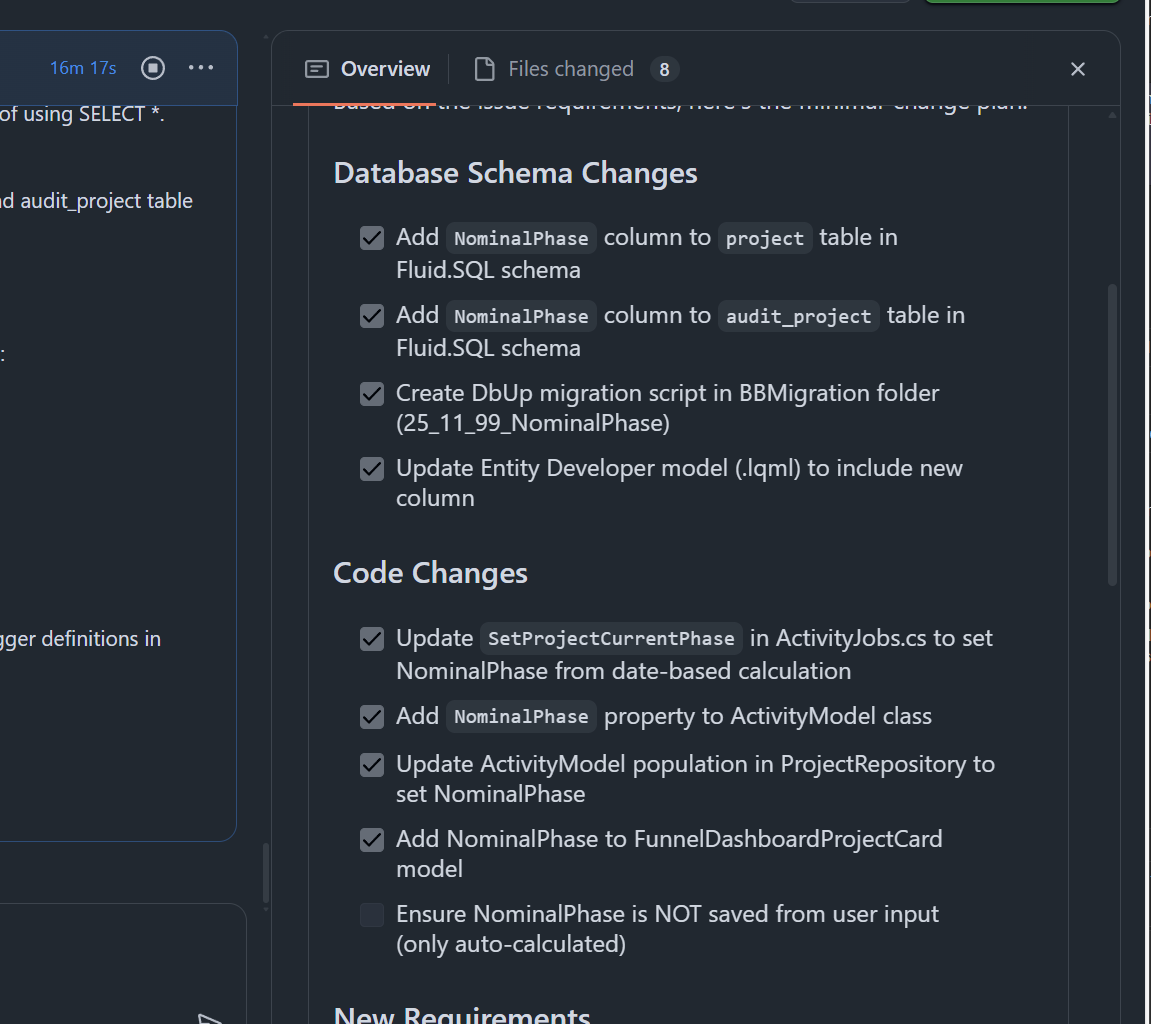

The heart of the work was:

- Add a NominalPhase to the project model

- Keep the existing business-logic phase intact

- Wire it all through schema, triggers, ORM, UI, and tests
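To make the core idea concrete, here is a minimal TypeScript sketch. It is hypothetical and not Fluid's actual C# code: the `Phase` values, the `Project` shape and the `reconcilePhase` helper are all assumptions made for illustration. It shows the new nominal phase sitting alongside the untouched business-logic phase, with a pure function that reports whether the two disagree.

```typescript
// Hypothetical phase names: Fluid's real phase list isn't described in the post.
type Phase = "Initiation" | "Planning" | "Delivery" | "Closure";

// Assumed project shape. The existing business-logic phase is left untouched;
// the new nominal phase is optional because older records won't have one yet.
interface Project {
  id: number;
  currentPhase: Phase; // computed by the existing business logic
  nominalPhase?: Phase; // new: the phase the project is declared to be in
}

interface PhaseReconciliation {
  projectId: number;
  currentPhase: Phase;
  nominalPhase?: Phase;
  isMismatch: boolean;
}

// Compares the declared (nominal) phase with the business-logic phase.
// A project with no nominal phase recorded is not reported as a mismatch.
function reconcilePhase(project: Project): PhaseReconciliation {
  const isMismatch =
    project.nominalPhase !== undefined &&
    project.nominalPhase !== project.currentPhase;
  return {
    projectId: project.id,
    currentPhase: project.currentPhase,
    nominalPhase: project.nominalPhase,
    isMismatch,
  };
}

const check = reconcilePhase({ id: 42, currentPhase: "Delivery", nominalPhase: "Planning" });
console.log(check.isMismatch); // true
```

Keeping the comparison as a pure function, separate from the code that derives the current phase, makes it easy to reuse across the schema, UI and test layers listed above.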

First, we check in on progress through the overview. It's getting there, so I can actually start reviewing the files changed so far...


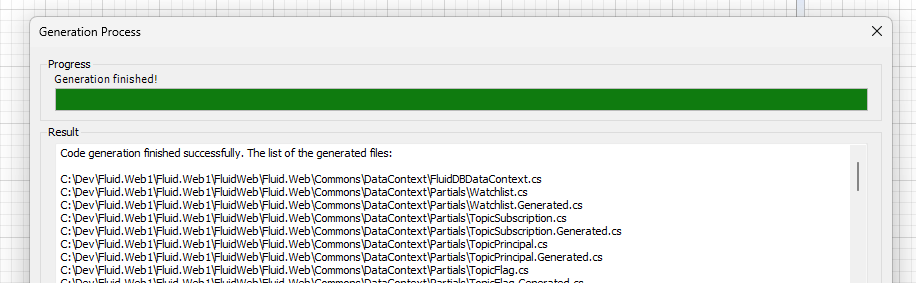

Copilot handled the data layer first – new column, migration scripts, and ORM updates.

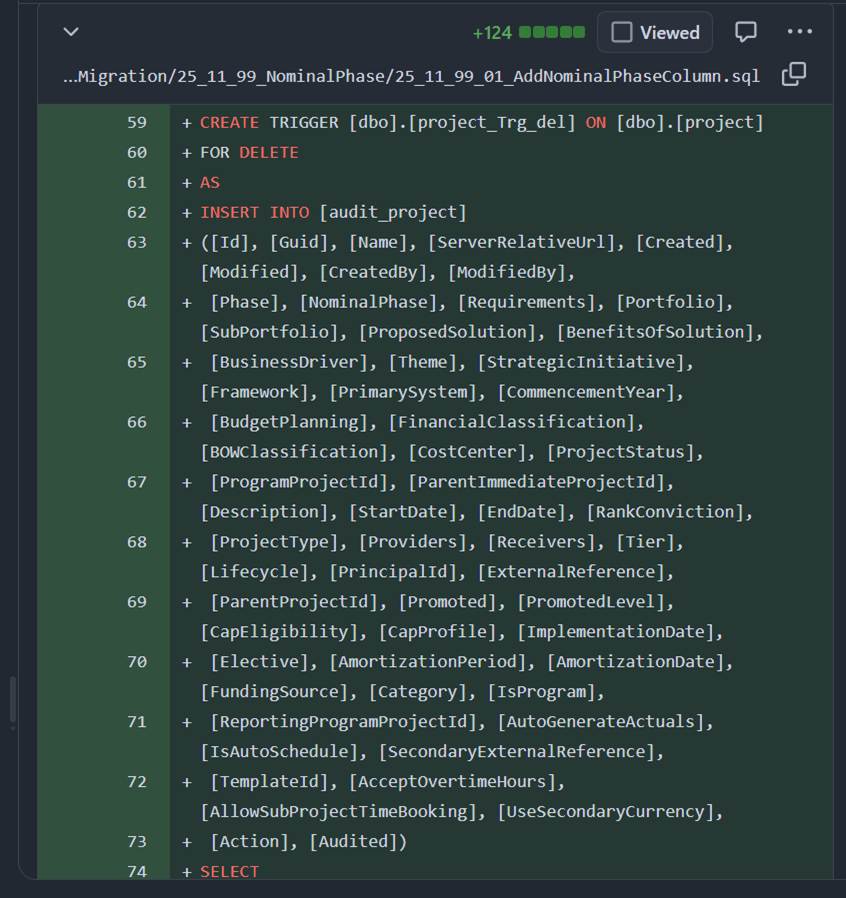

💡💡 Oh! I didn't tell Copilot to update the audit table triggers, so I drop in and add that requirement... and it acknowledges it.

⌚ It's been 16 mins. I prep the salmon and red pesto...

💡 Ah! I forgot to mention Project Search and the Dashboards. I'll add that in now so users get query filters for projects with mismatched phases.
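In the real codebase, a filter like this would most likely live in the database query behind Project Search and the dashboards. As an illustration only, here is a hypothetical TypeScript sketch of the same predicate applied to an in-memory list. The `ProjectRow` shape and the `mismatchedOnly` option are assumptions, not Fluid's API.

```typescript
type Phase = "Initiation" | "Planning" | "Delivery" | "Closure";

interface ProjectRow {
  id: number;
  name: string;
  currentPhase: Phase;
  nominalPhase: Phase | null; // null for projects created before the new column existed
}

interface ProjectSearchFilter {
  mismatchedOnly?: boolean; // hypothetical search option
  phase?: Phase; // filter on the business-logic phase
}

// Mirrors a SQL predicate such as:
//   NominalPhase IS NOT NULL AND NominalPhase <> CurrentPhase
function searchProjects(rows: ProjectRow[], filter: ProjectSearchFilter): ProjectRow[] {
  return rows.filter((row) => {
    if (filter.phase !== undefined && row.currentPhase !== filter.phase) {
      return false;
    }
    if (filter.mismatchedOnly) {
      return row.nominalPhase !== null && row.nominalPhase !== row.currentPhase;
    }
    return true;
  });
}

const rows: ProjectRow[] = [
  { id: 1, name: "CRM upgrade", currentPhase: "Delivery", nominalPhase: "Planning" },
  { id: 2, name: "Data warehouse", currentPhase: "Planning", nominalPhase: "Planning" },
  { id: 3, name: "Legacy migration", currentPhase: "Closure", nominalPhase: null },
];

console.log(searchProjects(rows, { mismatchedOnly: true }).map((p) => p.id)); // [ 1 ]
```

Treating a missing nominal phase as "not a mismatch" keeps legacy projects out of the mismatch view until someone records their nominal phase.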

🔔🔔 Taking a break for a moment to give a "hot take" on the experience: GitHub have done really well to make it analogous to our normal developer cycle of requirements, changes, review, updates and PR. It's really nice to be able to watch it work and interact with it as it goes. You never know how your requirements will be interpreted (or, in my case, forgotten). So five stars to GitHub for a considered developer experience.

It's finished! 21 mins. Good job, my little friend. I'll call him Gary. Anthropomorphising tech is fine, right?! He's good, but he's not my best friend... yet. 😜

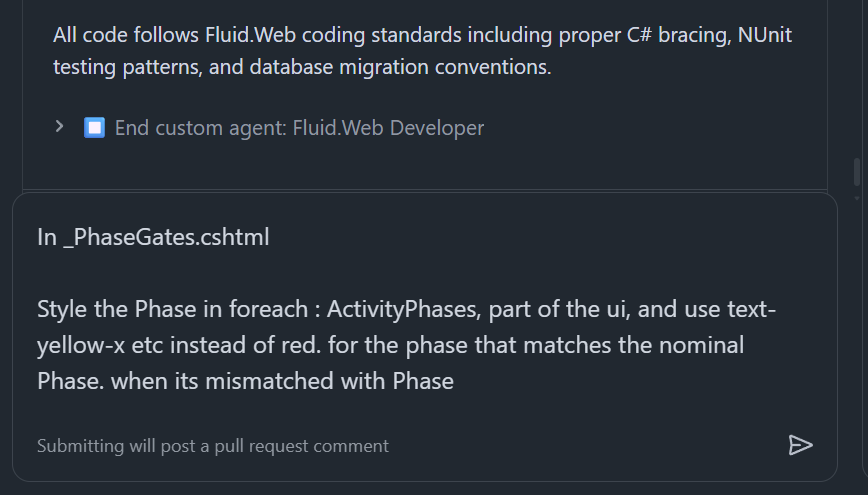

I review the branch changes just as I would any other code. I realise the UI changes are a bit light and, looking back at the issue description, it was quite vague about what to do there. So I give the agent feedback on specific changes to the UI component. Let's see how this goes 🤞 Fundamentally, it's difficult to explain complex UI: just try telling another dev in a chat message how you want the UI to look. Tricky for everyone, not least the developer...

At this point I'm sceptical it will do anything decent; the odds-on outcome is that it completely ruins the HTML layout and I'll need to fix it later. Here be dragons! 🐉

⌚ 3 mins later... we get the final branch, and Gary (Copilot) has assigned me as a reviewer on the PR.

Pulling the branch locally – reality check

⌚ It's been 25 mins.

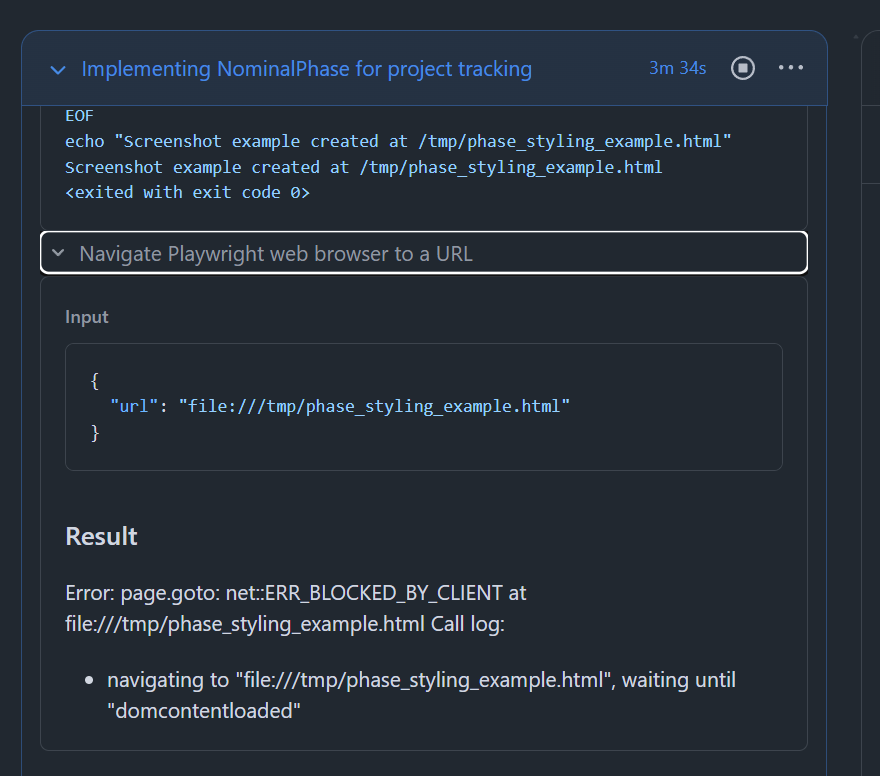

Once Copilot was “done”, I pulled the branch locally to see how it behaved outside the agent bubble.

From there, I worked through the remaining gaps. First, I reviewed the trigger updates it generated to make sure the NominalPhase logic was properly aligned with the existing business-logic phase. I then walked through the C# updates, confirming that the reconciliation logic made sense and tightening a few conditional paths where Copilot had been a bit loose.

Next, I checked all safety and “exists” conditions in the triggers against the rules defined in our agent file. From there, I verified the model changes Copilot made in the project object and ensured the new NominalPhase field was wired correctly across all layers.

I validated the DbUp scripts by running them locally, debugging the migration behaviour, and confirming the scripts produced the expected data changes. Once the database was in a good state, I checked the resulting dataset to ensure nominal and current phases matched our expectations. Finally, I stepped through SetProjectCurrentPhase in the debugger to confirm the mismatch detection logic was firing correctly.

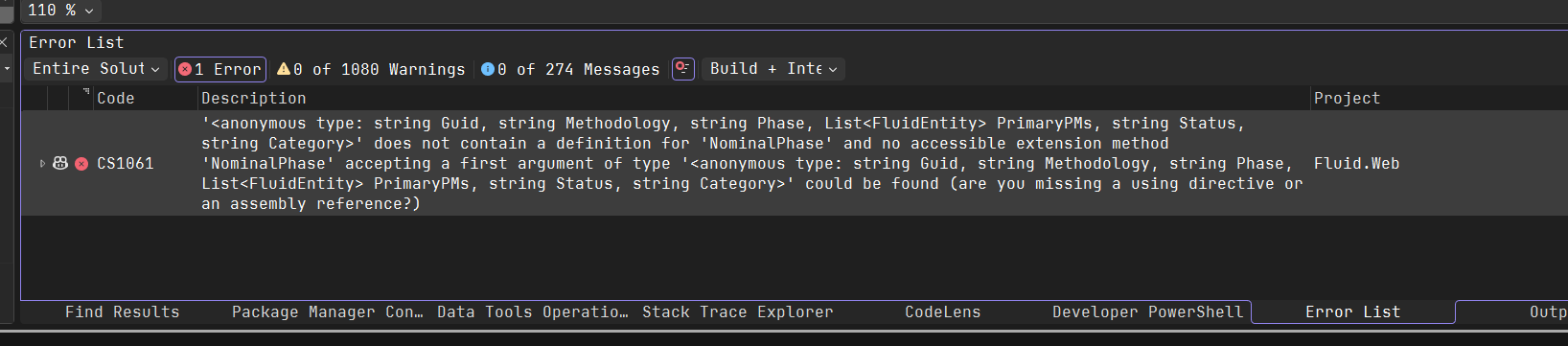

The largest change was in the ORM code-gen files. Of course, Copilot couldn't run the ORM tooling to build a new DbContext model, so that was down to me. But it had added the column to the lqml file, so I only had to open the tool and run the generation.

Unit tests: trust, but verify

At first, all the tests passed. Instantly. Which felt… optimistic. I've had some experience with AI-generated unit tests and, like many things in AI, they look good at first glance, but digging deeper you realise there are interpretation issues. Fortunately, I already had a comprehensive set of unit tests for the phase logic, and I'd have expected it to use these as a base to ensure coverage... (Narrator: it did not.)

But Rule #1: you are responsible for every line of code. Let's verify! 🕵️

A closer look showed the new tests weren’t actually ⚠️ included in the project file ⚠️, so they were never being run at all. Once included, they still passed, which was more reassuring. I then used Copilot inside Visual Studio to expand the coverage around mismatches and date-driven edge cases.

To verify the behaviour end-to-end, I stepped through SetProjectCurrentPhase in the debugger and watched how the logic responded to real project data. This gave me a clear view of how the nominal phase, the business-logic phase, and any mismatches were being evaluated at runtime. Once the feature flag was enabled, the mismatch detection kicked in exactly where it should, and I could confirm each branch of the logic behaved as intended. It was a quick way to validate that the reconciliation rules Copilot generated were not only compiling, but actually working correctly against real scenarios.
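To give a feel for the logic being stepped through, here is a hypothetical TypeScript sketch. It covers a business-logic phase derived from dates, a comparison against the nominal phase, and mismatch detection gated behind a feature flag. The real SetProjectCurrentPhase is C# and its rules aren't described in this post, so the date thresholds, the `phaseMismatchDetection` flag name and the function shapes are all assumptions. The date boundaries are exactly the kind of edge case worth pinning down with tests.

```typescript
// Hypothetical, simplified phases for this sketch.
type Phase = "Planning" | "Delivery" | "Closure";

interface Project {
  startDate: Date;
  endDate: Date;
  nominalPhase: Phase | null; // null when no nominal phase has been captured
  currentPhase?: Phase;
  phaseMismatch?: boolean;
}

// Hypothetical feature-flag shape.
interface FeatureFlags {
  phaseMismatchDetection: boolean;
}

// Assumed date rule: before the start date is Planning, start to end date
// (inclusive) is Delivery, and anything after the end date is Closure.
function deriveBusinessPhase(project: Project, today: Date): Phase {
  if (today.getTime() < project.startDate.getTime()) return "Planning";
  if (today.getTime() <= project.endDate.getTime()) return "Delivery";
  return "Closure";
}

// Sets the business-logic phase and, only when the flag is enabled,
// records whether it disagrees with the nominal phase.
function setProjectCurrentPhase(project: Project, today: Date, flags: FeatureFlags): Project {
  const currentPhase = deriveBusinessPhase(project, today);
  const phaseMismatch =
    flags.phaseMismatchDetection &&
    project.nominalPhase !== null &&
    project.nominalPhase !== currentPhase;
  return { ...project, currentPhase, phaseMismatch };
}

const project: Project = {
  startDate: new Date("2024-01-01"),
  endDate: new Date("2024-06-30"),
  nominalPhase: "Delivery",
};
const updated = setProjectCurrentPhase(project, new Date("2024-07-01"), { phaseMismatchDetection: true });
console.log(updated.currentPhase, updated.phaseMismatch); // Closure true
```

Gating detection behind a flag means the new column can ship and be backfilled before any mismatch warnings reach users.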

UI: surprisingly decent

Copilot also took a shot at the UI. With a fairly custom layout, I wasn’t expecting much, but what it produced was usable and structurally sound.

And to be fair, it has met the primary objective: highlighting where the nominal phase and the current phase don't match. There’s still some polishing to do, but as a first cut generated while I was doing other things, it’s a solid starting point. I'll probably spend an hour making it production-ready.

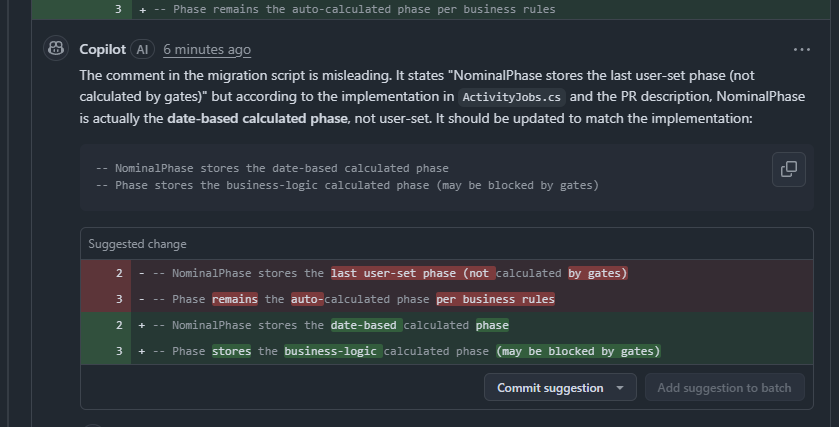

Code review – Copilot on Copilot

Before merging, I asked Copilot to review its own PR. It’s good at the small stuff & nitpicks: typos, structure, naming, and mild refactors.

Copilot's review isn't hugely valuable, but it's another check before you engage other team members, which saves feedback-loop cycles.

So… is this “real” coding?

In total, Copilot spent around twenty-five minutes doing the bulk of the work, and I spent maybe fifteen to twenty minutes on local fixes, checks and tests. I estimate this would have taken me 1-2 hours of uninterrupted work to reach the same point, which in reality is probably a day once meetings, chats, calls and other work are factored in. I'll take that return on investment!

This wasn’t random snippet generation or hallucinated architecture. It was a structured, agent-driven workflow:

- Clear task definition

- Continuous monitoring via the agent view

- Autonomous PR updates

- Human steering at key points

- Verification through tests, debug, and manual review

The result: real, production-ready code delivered faster.

Copilot gets 4 out of 5 ⭐ for effort, 10 out of 10 for speed 🏃♀️➡️.

And yes – it really did all that while I cooked dinner 🍝. I will put this code through UAT and a thorough code review, as I would with any developer's PR. Just a gentle reminder that everything Copilot produced today is MY responsibility: I'm driving, and I'm putting this PR in my name. If you use it, own it.

The downsides

I've been focusing on the positives of the experience, so in the interests of a balanced argument, let's discuss the issues with this development approach.

Developers lose context

Downside: When AI handles large chunks of code, developers risk losing the deeper context behind design decisions and system behaviour.

Counter-argument: Strong reviews, clear requirements, and mandatory debugging ensure engineers stay connected to the reasoning and architecture, not just the output.

Unintended changes

Downside: AI can introduce changes outside the requested scope, creating hidden side effects or regressions.

Counter-argument: Standard code review, automated tests, and controlled agent instructions minimise drift and keep changes tightly aligned to intent.

Developers lose skills

Downside: Over-reliance on AI risks eroding the foundational skills needed to troubleshoot, optimise, and design robust systems.

Counter-argument: AI reduces repetitive work, letting developers spend more time on architecture, problem-solving, debugging and higher-value thinking — the skills that actually matter.

Junior developers aren’t being hired

Downside: Organisations may be tempted to reduce junior hiring, assuming AI replaces entry-level engineering capacity.

Counter-argument: Junior developers are still essential for long-term capability — AI accelerates them, but it doesn’t replace the need for real human learning, growth, and team resilience.

How to be successful with Coding Agents

Developer communication is a critical skill. The engineers who can bridge business and technical domains are the same ones who communicate most effectively with AI agents. Clear articulation of requirements, whether technical, functional, or product-driven, directly determines the quality of AI-assisted output. The opportunity here isn’t to replace developers but to uplift them: strengthening their ability to express intent, structure problems, and guide outcomes. AI code generation works best when developers think clearly, communicate clearly, and design clearly. These are the human skills that will matter more, not less, in the next chapter of delivery.

Closing Thoughts for Developers and Product Teams

In the end, this whole exercise reinforced a simple truth: AI code generation is more like driver-assist than full autonomous mode. It can handle an impressive amount of the work, accelerate delivery, and free you up to focus on higher-value thinking — but you’re still in the driver’s seat.

You're the one driving, making the calls, ensuring quality, and handling those tricky edge cases. When your colleagues come in for the PR review, the words "well, that's what the AI did" will never be uttered.

With this approach, AI becomes a fantastic tool in your engineering arsenal for iterating quickly, spiking solutions, and generating scaffolding and repetitive code. Focus on outcomes, not if blocks.

💥💥 Newsflash: if you're worried AI will replace your job writing code, it already has. But you’re not unemployed; you’re doing a different job now. In the same way that compilers, IDEs, IntelliSense, automated builds and cloud pipelines displaced hours of manual effort, AI has quietly taken over the repetitive, mechanical parts of software development. What it hasn’t replaced is you. Your role has shifted upward into direction, decision-making, validation and product delivery. You’re no longer the hand typing every brace; you’re the brain steering the outcome, shaping the logic, defining the standards, and ensuring the product actually solves the right problem. Embrace change.

Beyond your own workflow, think about how AI code generation fits into your existing development lifecycle. Don’t expect it to magically deliver solutions on its own. If you’re in IT leadership, make sure your roadmap highlights the risks, the mitigations, and the expectations you’ve set with your teams.

Like anything in project management and PMO practice, it’s about securing real benefits and a genuine return on investment — both time and money. With a thoughtful approach and clear communication, AI code generation becomes another powerful tool in your development team’s arsenal.

Finally, I leave the last word to Kent Brockman...
